How Much Do ICU Specialists Vary In Their Ability To Save Patients’ Lives?

Years ago, while doing an ICU Quality Improvement Project, I stumbled upon a method to quantitatively assess the life-saving skills of a group of ICU doctors. The amount they varied was shocking.

To the best of my knowledge, no study has ever been published in the medical literature with granular statistical comparisons of life-saving skills amongst a group of doctors.

This is an “orphan” study that I completed years ago but never tried to publish. Why, you ask? Well, I was hesitant for fear that it would generate discord and controversy (and maybe even embarrassment) among the ICU team I was leading at the time. So I deferred it for a while; then Covid arrived, and I left the institution soon after in protest over its initial Covid response of “supportive care only” (fluids, Tylenol, oxygen, ventilators). Still, in my mind, it is the most interesting and thought-provoking study I have done in my career.

I think the best way to understand the importance and context of what I found is to imagine that every doctor could be assigned a skill rating like the “Quarterback Rating” used to assess NFL quarterbacks. From my Brave Browser AI:

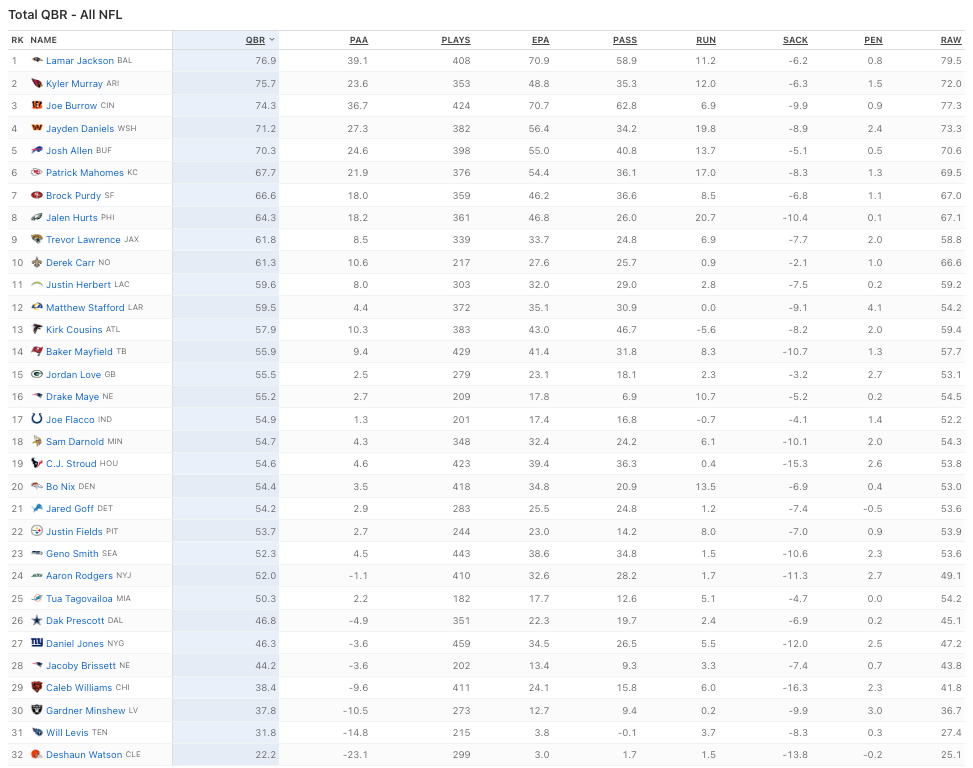

How much do quarterbacks vary in skill? Quite a lot, as you can see below for this season: the highest-rated QB has a score roughly three and a half times that of the lowest-rated QB (Lamar Jackson has a QBR of 76.9, while Deshaun Watson has a QBR of 22.2).

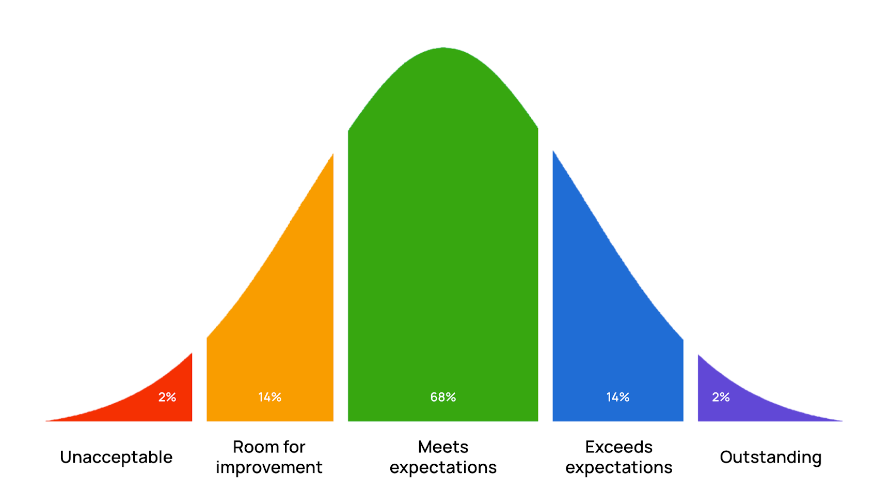

Like most human characteristics, QBR ratings roughly follow what is called a “normal” distribution, i.e., a “bell” curve: most fall close to the overall average of 55.5, while a handful of QBs are “outliers,” either well above or well below that average, as shown below.

Note that the median QBR of 54.9 is close to the average of 55.5, which means the ratings are spread roughly symmetrically around the center, consistent with (though not by itself proof of) a “normal” distribution. What bothers me about the current QBR rankings is that I am a sad, life-long, obsessive NY Jets fan, and my favorite QB of all time, Aaron Rodgers, has a QBR this year ranked 24th out of 32 (which, like everything related to the Jets, is totally disappointing, because Aaron actually holds the record for the highest career passer rating, 103.0, a different metric from QBR).
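The mean-versus-median check is easy to run on any list of ratings. The minimal Python sketch below uses made-up rating lists (not real QBR data) to show why a near-zero gap points to a symmetric spread rather than proving a bell shape: an evenly spread, flat list has matching mean and median, while a single extreme value pulls the two apart.

```python
from statistics import mean, median


def center_summary(ratings):
    """Return (mean, median, mean minus median) for a list of ratings."""
    m = mean(ratings)
    md = median(ratings)
    return m, md, m - md


# Hypothetical ratings spread evenly from 20 to 90: flat, not bell-shaped,
# yet the mean and median coincide because the spread is symmetric.
flat = [20, 30, 40, 50, 60, 70, 80, 90]
print(center_summary(flat))

# Hypothetical skewed ratings: one extreme value drags the mean far above the median.
skewed = [1, 1, 1, 2, 100]
print(center_summary(skewed))
```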

Also know that ICU specialists are the closest thing to a “quarterback” among physician specialties, given that we manage huge teams to care for patients: residents, fellows, nurses, respiratory therapists, nutritionists, physical therapists, and, most of all, consultants and proceduralists from other specialties. But we are the boss, and we make all final treatment decisions regarding the patient. So, imagine if you could choose a doctor by similarly accurate quantitative metrics of skill. You could then choose “the best” doctor for whatever ailment or situation you faced!

However, patients currently have very little ability to assess the true skill level of their doctor, because the metrics we rely on do little to identify skill in producing the best outcomes for patients. Instead, people tend to rely on the following imperfect metrics in choosing “the best doctor”:

Reputation:

Word of mouth - probably the strongest one. If someone you know had a good experience or outcome with a doctor, or really likes their doctor’s personality or bedside manner, they will refer you to that doctor. But bedside manner does not necessarily correlate with actual skill in diagnosing and treating.

Academic - i.e., number of publications, leadership positions held, society positions held, research funding, teaching awards, etc. (I have won several major departmental teaching awards at different institutions).

Stature of the institution they work for or trained at - e.g., “my doctor trained at the Mayo Clinic” or “my surgeon is the Chief of Surgery at UCLA” (I trained at St. George’s University in Grenada, West Indies, which some have used against me).

Clinical - number of years in practice, size of practice, success of practice (how well marketed it is), kind of car the physician drives (status symbols)?

Awards - there is a magazine called “Top Doctor Magazine” in which physicians in your community are surveyed or can simply nominate a colleague they think is a “Top Doctor.” I used to win Top Doctor Awards when I was ICU director at the University of Wisconsin (UW), for instance. These awards can obviously be gamed if someone decides to lobby colleagues for votes (or maybe even hire bots?). I know that UW would regularly encourage us to nominate colleagues for the award.

My favorite is the magazine you find in the seat-back pocket on airplanes, which has headlines like “Best Dermatologist in the U.S.” next to a flashy picture of a super well-dressed physician with an inviting smile. What makes him or her the best dermatologist is beyond me, except for the amount they were able to pay for the ad placement.

Certification - i.e., specialty board certifications, etc. As a guy who just had all of his revoked, you can guess how important I think this is, but I can tell you that “the system” places a lot of importance on this distinction by requiring it for both insurance participation and hospital privileges. Problem: nearly all doctors are board certified, so it does little to differentiate them at this point.

Malpractice History - the National Practitioner Data Bank records how often a doctor has been successfully sued for malpractice, etc., although access to it is restricted to hospitals, licensing boards, and other authorized entities rather than the public. Problem: many doctors have never been successfully sued, and having been sued does not necessarily mean someone is a bad doctor (but it might).

Post-Op wound infection rates - this is probably the only truly statistical metric you can judge doctors by, but it only applies to surgeons, who are a small percentage of all doctors (most illness is medical, not surgical), and wound infection may not correlate with surgical skill.

Similarly, surgeons have to report their “re-operation” rates, which is probably the best metric by which to judge a surgeon; however, this number is quite small and likely does not differentiate surgeons very broadly (although, as a non-surgeon, I do not know that for sure). What I do know is that data like these are not publicly available and are only for internal use by hospitals and departments, which might intervene when a surgeon “falls off the curve.”

Patient Complaints - the problem here, again, is that this is internal hospital data and not publicly available (but it would be really good to know!).

Geography and Insurance/Schedule Availability - I think some patients assume that doctors are generally equal in skill and don’t really care about a doctor’s reputation, so they choose one based on who is closest geographically and/or most available on their insurance plan.

The problem is that most of the above metrics are “qualitative” (i.e., subjective) rather than accurate, objective assessments of actual doctoring skill. Although many might think that qualitative assessments of skill correlate with quantitative ones, at the risk of foreshadowing, I can tell you that in what I found, none of the above metrics correlated with patient outcomes. Literally none.

This is probably a good time to tell you of a unique method that I devised some years ago to help friends or family choose “the best” doctor locally:

I would call the nearest teaching hospital and ask the operator to page the “Chief Resident” of whatever specialty I was trying to find a doctor in (I would introduce myself simply as “Dr. Kory”). Know that a Chief Resident is a doctor who has completed their training but gets asked to stay on an extra year to help run the teaching program. It is an honor bestowed on a graduating doctor felt to be the “best trainee” in terms of overall qualities of knowledge, professionalism, empathy, teaching, and collaboration.

I would then simply explain to the Chief Resident that I had a family member recently move to the area and I wanted their help in referring my loved one to the “best” of whatever specialty they were in. I did this because Chief Residents have deep personal insight and observation of all of their teaching mentors and typically will give you one name within like 2 seconds. Bam, now you have the “best doctor” in the area, at least in terms of caring, knowledge, and empathy.

Anyway, let me give you some background on how this study came about. For those of you who know something about my career, recall that I was one of the national and international pioneers in a newly developed field called “Point of Care Ultrasound” (POCUS), in which we developed and taught ultrasound diagnostic skills to bedside physicians. I developed and ran the national training courses put on by the American College of Chest Physicians with my mentor Dr. Paul Mayo, and later became the senior editor of the best-selling textbook in the field for its first two editions (I recently resigned from the 3rd edition, since I have been unable to keep up with the field since my “excommunication” from ICU medicine). Below is one of my proudest career achievements: it has been translated into 7 languages and is read across the world (although sold in paperback, it is linked to an online “video book” version in which we included a massive library of video clips of all the pertinent bedside diagnoses you can make with ultrasound):

I cannot tell you how many hundreds of hours I put into editing that book (or how many thousands into learning the skill). We wrote the book to help doctors make life-saving or critical diagnoses rapidly and efficiently, i.e., “at the point of care.” Knowing how to perform and interpret such exams on my own allowed me to bypass the need to put in an ultrasound order, wait for an ultrasound technician to perform an almost always unnecessarily comprehensive exam (for billing/reimbursement), have the images transmitted to the radiologist, and then wait for the radiologist to get to the study in their queue, interpret it, and send the report to the ordering doctor. Whew.

When someone is crashing in front of you, this process presents a massive obstacle to delivering accurate and often life-saving care efficiently (especially when you are “sweating it out” at the bedside, oftentimes with anxious family members challenging you to find out what is wrong with their loved one). Remember that for nearly any disease, earlier treatment is more effective than delayed treatment, and that is never more true than in critical illness. Thus you can understand the immense importance of having an ultrasound-trained doctor at your bedside, particularly (and almost exclusively) for those in the ICU.

Anyway, as an expert in the field, I wondered how my POCUS skills were affecting my ordering of chest x-rays, CT scans, and formal ultrasound exams. So I set out to gather data that would allow me to compare my diagnostic testing use against that of the other ICU specialists on the Critical Care Service, of which I was Chief at the time.

Why does resource use matter? Well, beyond the cost savings and efficiency (and lower radiation exposure) of ordering fewer tests, know too that, in the case of CT scans for instance, moving a critically ill patient in multi-organ failure to the Radiology department incurs risk and consumes a lot of labor: it requires the presence of an ICU nurse, a transporter, a respiratory therapist, and in rare cases an anesthesiologist or ICU doc for the whole time the patient is “off the unit” getting the scan. I have been called to CT scanners numerous times throughout my career for dangerous situations that developed during transport and/or the scan.

This study suddenly became possible when the hospital purchased some really cool software called QlikView, which was able to “data mine” Epic’s electronic medical record. I was taught how to use the software and was also encouraged to perform some “quality improvement” projects.

Most important, QlikView allowed me to query how many times a doctor on my service had ordered something. A key point is that at the center where I worked, ICU specialists covered the medical intensive care service one week at a time, from Monday through Sunday. Each Monday, every patient was switched to the name of the doctor on service, and thus every subsequent order placed for that patient that week was recorded under the name of the physician in charge.
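The Monday-to-Sunday attribution rule can be sketched in a few lines of Python. This is only an illustration of the rule, not how QlikView or Epic store the data; the `schedule` dictionary, the dates, and the doctor names are hypothetical placeholders, assuming exactly one intensivist per Monday-start week:

```python
from datetime import date, timedelta


def week_start(day: date) -> date:
    """Return the Monday of the Monday-to-Sunday week containing `day`."""
    return day - timedelta(days=day.weekday())  # weekday(): Monday == 0


def attending_for(day: date, schedule: dict) -> str:
    """Look up the intensivist on service on `day`.

    `schedule` is a hypothetical mapping from each week's Monday to the
    name of the intensivist covering that week.
    """
    return schedule[week_start(day)]


# Hypothetical two-week schedule (January 2, 2017 was a Monday).
schedule = {date(2017, 1, 2): "Dr. A", date(2017, 1, 9): "Dr. B"}
print(attending_for(date(2017, 1, 8), schedule))  # Sunday of week 1 -> Dr. A
print(attending_for(date(2017, 1, 9), schedule))  # Monday of week 2 -> Dr. B
```

Any order placed on a given date is then credited to whoever `attending_for` returns for that date, mirroring the weekly switch described above.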

Next, using the ICU schedule for the year, I was able to calculate the total number of days each doctor was on the ICU service that year. So, for instance, if I wanted to know how many times a doctor ordered a chest x-ray, all I had to do was enter a time period and a location (ICU), then the doctor’s name and the test I was interested in, and I would get the total number of times that doctor ordered that test in the ICU over that period. Thus, with this software and the ICU specialist schedule, I was able to calculate the average number of times per day on service that each doctor ordered a test or performed an intervention.
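The per-day rate is simply a count of orders divided by days on service. A minimal Python sketch, assuming the orders have been exported as hypothetical `(doctor, test)` pairs and the schedule as a `doctor -> days on service` dictionary (both formats and all numbers are illustrative, not QlikView output):

```python
from collections import Counter


def orders_per_service_day(orders, service_days, test):
    """Average number of orders for `test` per day on service, per doctor.

    orders: iterable of (doctor, test) pairs, one per order placed in the ICU
            during the study period (hypothetical export format).
    service_days: dict mapping doctor -> number of days on the ICU schedule.
    """
    counts = Counter(doctor for doctor, t in orders if t == test)
    # Counter returns 0 for doctors who never ordered the test.
    return {doctor: counts[doctor] / days for doctor, days in service_days.items()}


# Hypothetical data: three doctors, two kinds of tests.
orders = [("Dr. A", "chest x-ray")] * 30 + [("Dr. B", "chest x-ray")] * 84
orders += [("Dr. A", "CT")] * 5
service_days = {"Dr. A": 42, "Dr. B": 42, "Dr. C": 35}
rates = orders_per_service_day(orders, service_days, "chest x-ray")
print(rates)
```

Dividing by days on service rather than reporting raw counts keeps doctors who worked more weeks from looking like heavier users simply because they were present longer.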

Armed with QlikView, I first set out to answer the question of how much the 17 “intensivists” (ICU specialists) on my service differed in resource use, not only in radiologic tests ordered but also in things like the rate at which they consulted other specialists, the rate at which they ordered invasive catheters (I felt many of my colleagues “overused” these interventions), the rate at which their patients needed dialysis, etc. Realize that dialysis use is more a marker of quality of care than of resource use, because dialysis is not really a choice: it is only done when the kidneys have nearly completely failed, so this metric is actually a proxy for how often patients under their care developed kidney failure.
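One simple way to summarize how much a group of doctors differs on any one per-day rate is the fold difference between the highest and lowest rate (the same kind of comparison as in the QBR example), plus each doctor’s rate relative to the group median. A minimal Python sketch with made-up rates; the choice of these two summary statistics is an illustrative assumption, not the analysis used in the study:

```python
from statistics import median


def spread(rates):
    """Summarize between-doctor variation for one per-day rate.

    rates: dict mapping doctor -> rate (hypothetical values).
    Returns (fold difference between highest and lowest rate,
             dict of each doctor's rate divided by the group median).
    """
    values = list(rates.values())
    lo, hi = min(values), max(values)
    mid = median(values)
    if mid == 0:
        raise ValueError("median rate is zero; relative rates are undefined")
    fold = hi / lo if lo > 0 else float("inf")
    relative = {doctor: r / mid for doctor, r in rates.items()}
    return fold, relative


# Hypothetical chest x-ray orders per service day for three doctors.
fold, relative = spread({"Dr. A": 0.5, "Dr. B": 1.0, "Dr. C": 2.0})
print(fold, relative)
```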

As I was working on these analyses, one day I discovered a method to compare each specialist’s “patient outcomes” (i.e., the survival rates of their patients) as well. Know that, depending on the week or season and the ICU location (e.g., urban, rural, wealthy, poor), average monthly patient mortality rates range from 8% to 25%. At UW I saw average rates of around 8-12%, and in lower Manhattan earlier in my career, I saw rates between 14% and 25%.

I was able to assess and compare the life-saving skills of the ICU doctors because every patient admitted to that ICU had an APACHE score calculated by a nurse. What is an APACHE score? Briefly, it is a widely used severity-of-disease classification system in intensive care units (ICUs). It assesses the severity of illness on the first day in the ICU based on twelve physiologic measurements, age, and previous health conditions. Most important, APACHE scores are used to predict a patient’s overall risk of dying and are based on outcomes data from tens of thousands of patients. The score doesn’t predict risk accurately for an individual patient, but it is highly accurate for a population of patients with that score. To wit:

An APACHE II score of 11-20 has a mortality rate of 28.45%

Patients with higher APACHE II scores (31-40) had a significantly higher mortality rate, and a score of 71 (the highest possible) carries a mortality rate above 50%.

Conversely, patients with lower APACHE II scores (3-10) had a 0% mortality rate (all survived).

Now, in this database, I could also see which doctor each patient was admitted to. This is crucial, because it is well known that a patient’s prognosis is largely determined by their response to treatment on their first day in the ICU. So, even if a patient was admitted on a Sunday and the admitting doctor therefore had the patient for only one day, I felt it valid to count that patient as a marker of the admitting doctor’s skill. Plus, I did it the same way for everybody, so even if the doctor who took over the next day did something sub-optimal, that risk would be spread evenly across all.

Thus, with these data, I could:

Calculate the average APACHE score (and thus the average estimated mortality rate) for all patients admitted to each ICU doctor over a period of time. I used the largest sample I could, which covered a period of 18 months.

In the same database I could also see whether the patient was alive or dead upon ICU discharge and also hospital discharge - i.e. some patients survive the ICU but not the hospital. Thus, I could calculate the actual “observed” mortality rate of patients for each ICU doctor.

With the two data points above, I was able to calculate what is called an O/E mortality ratio for each doctor (observed/expected deaths). The doctors best at saving lives will have the lowest O/E ratios, and the worst will have the highest. For example, if the average predicted mortality rate for the patients on my service one year was 28% but the observed mortality rate was 22%, the O/E ratio is 22/28, or about 0.79. Conversely, if the predicted mortality is 28% but 35% of my patients actually died, the O/E ratio is over 1.0 (35/28 = 1.25, to be exact).
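The O/E arithmetic can be sketched in Python. This is a minimal illustration under stated assumptions, not the QlikView workflow used in the study: it assumes each patient record has been reduced to a hypothetical `(doctor, predicted_risk, died)` tuple, where `predicted_risk` is an APACHE-derived probability of death, and it sums those risks to get expected deaths.

```python
from collections import defaultdict


def oe_ratios(patients):
    """Observed/expected (O/E) mortality ratio for each admitting doctor.

    patients: iterable of (doctor, predicted_risk, died) tuples, where
    predicted_risk is a hypothetical APACHE-derived probability of death
    (0 to 1) and died is True if the patient died before hospital discharge.
    """
    observed = defaultdict(int)    # actual deaths per doctor
    expected = defaultdict(float)  # sum of predicted risks = expected deaths
    for doctor, risk, died in patients:
        observed[doctor] += int(died)
        expected[doctor] += risk
    return {doctor: observed[doctor] / expected[doctor] for doctor in expected}


# Hypothetical cohorts matching the worked example: 100 patients each with a
# 28% predicted risk; 22 deaths for Dr. A and 35 deaths for Dr. B.
patients = [("Dr. A", 0.28, i < 22) for i in range(100)]
patients += [("Dr. B", 0.28, i < 35) for i in range(100)]
ratios = oe_ratios(patients)
print({doctor: round(r, 2) for doctor, r in ratios.items()})
```

With these inputs the ratios round to 0.79 and 1.25, matching the worked example. Summing per-patient risks gives expected deaths directly; the study as described averaged APACHE scores first, and because the score-to-risk conversion is not linear, the two approaches can give slightly different expected rates.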

OK, now that you have some understanding of the background and methods of the study, let’s move on to the results and analysis, which I will present here.

